Criminals are using AI voice cloning for cyber frauds

Artificial Intelligence (AI) is enabling scammers to clone voices, even those of friends and family, leading to an increase in fraudulent activities.

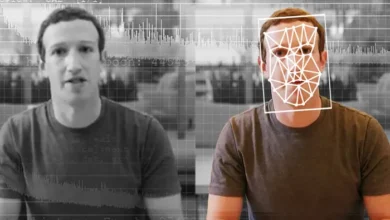

You may have seen memes on social media where content creators use AI voice cloning to make famous people appear to do or say things they normally wouldn’t, such as making politicians sing popular songs or having celebrities make funny statements. While these voice-cloned memes are sometimes meant as humor, cybercriminals have found a treasure trove in the same AI voice-cloning apps and are using them to deceive individuals and commit financial fraud.

Spurt in Voice Cloning Fraud Across India

In recent months, police stations across India have reported a spate of complaints where scammers used voice-mimicking software to impersonate acquaintances and extract money.

In one shocking case in Bhopal, a cyber fraudster cloned the voice of a victim’s brother using an AI voice synthesizer. The criminal called the victim pretending to be his brother and claimed that their mother was seriously ill and needed urgent hospitalization. Deceived by the urgency in a voice that sounded just like his brother’s, the victim immediately transferred Rs 4.75 lakh to the account shared by the scammer. Later, when the victim called his actual brother, he realized he had been defrauded.

AI Advancements Make Cloning Incredibly Easy

Recent advancements in AI and generative modelling have made it extremely easy to clone voices, even with limited voice data. AI voice cloning apps need only a few minutes of recorded audio to produce a digital voice model that can mimic the tone, pitch, cadence and other vocal attributes of the target person. Advanced AI voice cloning tools such as ElevenLabs and others are being used by criminals for this purpose.

These apps can generate highly realistic synthetic voices that capture the nuances and emotional expressions of the original voice. The resulting cloned voice is difficult to distinguish from the real thing, even for close friends or family members.

Cloned Voices Used in Elaborate Ruses

Armed with cloned voices of their targets, criminals weave elaborate ruses to convince victims and extract money or sensitive information. Some common tactics include:

- Faking an emergency or accident involving a friend or family member who then “calls” the victim asking for urgent financial assistance.

- Impersonating a bank officer or tax authority official demanding immediate payment of dues.

- Spinning a sob story pretending to be a poor acquaintance in dire straits.

- Extracting personal information by posing as close friends.

AI Makes Social Engineering Frauds Easier

The ability to clone voices with AI has disturbing implications for social engineering crimes. Social engineers manipulate human psychology and emotions to gain trust and extract sensitive information.

Historically, hacking required technical exploits to break into secure systems, making machines the weak link. However, with advancements like AI voice cloning, humans and their susceptibility to manipulation are now emerging as the most vulnerable target for criminals.

AI-enabled voice cloning greatly expands the options criminals have for fabricating intricate social engineering scams. For example, fraudsters could impersonate a victim’s best friend or a trusted authority figure to instill a false sense of security.

They could then exploit this trust to obtain personal data, account access, or passwords that enable identity theft and financial fraud. Experts warn that such AI-powered scams targeting human vulnerabilities will become a growing menace. Staying vigilant and verifying unsolicited communications through a secondary channel, such as calling the person back on a known number, will be critical to thwarting social engineering schemes made more potent by AI voice cloning.

Numerous Cases of Voice Cloning Frauds

In April 2023, a woman named Jennifer DeStefano from the USA was the victim of an AI voice cloning scam in which criminals used technology to mimic the voice of her 15-year-old daughter, Briana, in a fake kidnapping attempt. The scammers initially demanded a $1 million ransom, later lowered to $50,000, while imitating Briana’s voice crying for help. DeStefano realized it was a scam and stalled for time until she could contact authorities. The fraud was exposed when the real Briana, unaware of the situation, called to say she was safe.

In 2019, scammers cloned the voice of a CEO in the UK to fraudulently get an employee to transfer €220,000 to a supplier account controlled by the criminals.

In Haryana, a man was defrauded of Rs 30,000 when a scammer impersonated his friend using AI voice cloning, claiming to need money for hospitalization after an accident. A similar incident occurred in Shimla, where a man lost Rs 2 lakh after fraudsters mimicked his uncle’s voice.

In Bhopal, 159 voice cloning fraud cases were reported in 2023 up to August, with cumulative losses of Rs 43.57 lakh, according to police reports.

Similar cases have been reported from various parts of the country where AI tools are used to manipulate videos, creating convincing but fake content. These fraudulent videos make it appear as if the person in them is genuine when, in reality, the content is manipulated.

How Criminals Create Voice Clones for Fraud

The typical process followed by criminals involves:

- Gathering voice samples of targets from public social media posts or recorded calls.

- Feeding this audio data into AI voice cloning apps to produce a digital model that mimics the vocal mannerisms.

- Using the cloned voice model to make scam calls mimicking acquaintances of the victim.

- Spinning fictitious scenarios and urgent requests to manipulate victims into complying.

Safety Tips to Avoid Being Defrauded

To stay safe from voice cloning scams, experts recommend:

- Being cautious of unexpected calls demanding money and independently verifying identity.

- Listening closely for unnatural gaps or tone in conversations that signal an AI voice clone.

- Avoiding publicly sharing audio clips that can be used to clone your voice.

- Using call recording apps so evidence is available if targeted by scammers.

- Reporting any suspicious calls to cyber crime authorities immediately.

- Being cautious when answering calls from unknown numbers, since callers can record your voice and clone it using AI.

The rapid evolution of AI will continue empowering criminals with more potent tools. By being vigilant and following safety tips, potential victims can protect themselves from emerging sophisticated AI-enabled frauds.
